sources

Powered by Perlanet

The team explore one of the newest areas of modern astronomy, the search for exoplanets, the distant worlds that orbit stars beyond our own solar system.

The team explore one of the newest areas of modern astronomy, the search for exoplanets, the distant worlds that orbit stars beyond our own solar system.  Bliss and Book's investigation into Harkup's death continues as Book discovers some surprising evidence in the case of the bombsite skeletons.

Bliss and Book's investigation into Harkup's death continues as Book discovers some surprising evidence in the case of the bombsite skeletons.  In 1989, Daniel is left reeling and heartbroken at Alison's sudden disappearance. In 2015, a surprise betrayal takes Daniel by surprise, but he has to fight for what he loves.

In 1989, Daniel is left reeling and heartbroken at Alison's sudden disappearance. In 2015, a surprise betrayal takes Daniel by surprise, but he has to fight for what he loves.  In 1989, Alison's home life goes from bad to worse as she takes on caring for Peter. In 2015, Daniel struggles with Alison's revelations of what occurred during their relationship twenty years ago.

In 1989, Alison's home life goes from bad to worse as she takes on caring for Peter. In 2015, Daniel struggles with Alison's revelations of what occurred during their relationship twenty years ago.

Watched on Sunday July 13, 2025.

Watched on Saturday July 12, 2025.

Watched on Friday July 11, 2025.

Watched on Tuesday July 8, 2025.

Watched on Sunday July 6, 2025.

Recently, Gabor ran a poll in a Perl Facebook community asking which version of Perl people used in their production systems. The results were eye-opening—and not in a good way. A surprisingly large number of developers replied with something along the lines of “whatever version is included with my OS.”

If that’s you, this post is for you. I don’t say that to shame or scold—many of us started out this way. But if you’re serious about writing and running Perl in 2025, it’s time to stop relying on the system Perl.

Let’s unpack why.

What is “System Perl”?

When we talk about the system Perl, we mean the version of Perl that comes pre-installed with your operating system—be it a Linux distro like Debian or CentOS, or even macOS. This is the version used by the OS itself for various internal tasks and scripts. It’s typically located in /usr/bin/perl and tied closely to system packages.

It’s tempting to just use what’s already there. But that decision brings a lot of hidden baggage—and some very real risks.

Which versions of Perl are officially supported?

The Perl Core Support Policy states that only the two most recent stable release series of Perl are supported by the Perl development team [Update: fixed text in previous sentence]. As of mid-2025, that means:

-

Perl 5.40 (released May 2024)

-

Perl 5.38 (released July 2023)

If you’re using anything older—like 5.36, 5.32, or 5.16—you’re outside the officially supported window. That means no guaranteed bug fixes, security patches, or compatibility updates from core CPAN tools like ExtUtils::MakeMaker, Module::Build, or Test::More.

Using an old system Perl often means you’re several versions behind, and no one upstream is responsible for keeping that working anymore.

Why using System Perl is a problem

1. It’s often outdated

System Perl is frozen in time—usually the version that was current when the OS release cycle began. Depending on your distro, that could mean Perl 5.10, 5.16, or 5.26—versions that are years behind the latest stable Perl (currently 5.40).

This means you’re missing out on:

-

New language features (

builtin,class/method/field,signatures,try/catch) -

Performance improvements

-

Bug fixes

-

Critical security patches

- Support: anything older than Perl 5.38 is no longer officially maintained by the core Perl team

If you’ve ever looked at modern Perl documentation and found your code mysteriously breaking, chances are your system Perl is too old.

2. It’s not yours to mess with

System Perl isn’t just a convenience—it’s a dependency. Your operating system relies on it for package management, system maintenance tasks, and assorted glue scripts. If you install or upgrade CPAN modules into the system Perl (especially with cpan or cpanm as root), you run the risk of breaking something your OS depends on.

It’s a kind of dependency hell that’s completely avoidable—if you stop using system Perl.

3. It’s a barrier to portability and reproducibility

When you use system Perl, your environment is essentially defined by your distro. That’s fine until you want to:

-

Move your application to another system

-

Run CI tests on a different platform

-

Upgrade your OS

-

Onboard a new developer

You lose the ability to create predictable, portable environments. That’s not a luxury—it’s a requirement for sane development in modern software teams.

What you should be doing instead

1. Use perlbrew or plenv

These tools let you install multiple versions of Perl in your home directory and switch between them easily. Want to test your code on Perl 5.32 and 5.40? perlbrew makes it a breeze.

You get:

-

A clean separation from system Perl

-

The freedom to upgrade or downgrade at will

-

Zero risk of breaking your OS

It takes minutes to set up and pays for itself tenfold in flexibility.

2. Use local::lib or Carton

Managing CPAN dependencies globally is a recipe for pain. Instead, use:

-

local::lib: keeps modules in your home directory. -

Carton: locks your CPAN dependencies (likenpmorpip) so deployments are repeatable.

Your production system should run with exactly the same modules and versions as your dev environment. Carton helps you achieve that.

3. Consider Docker

If you’re building larger apps or APIs, containerising your Perl environment ensures true consistency across dev, test, and production. You can even start from a system Perl inside the container—as long as it’s isolated and under your control.

You never want to be the person debugging a bug that only happens on production, because prod is using the distro’s ancient Perl and no one can remember which CPAN modules got installed by hand.

The benefits of managing your own Perl

Once you step away from the system Perl, you gain:

-

Access to the full language. Use the latest features without backports or compatibility hacks.

-

Freedom from fear. Install CPAN modules freely without the risk of breaking your OS.

-

Portability. Move projects between machines or teams with minimal friction.

-

Better testing. Easily test your code across multiple Perl versions.

-

Security. Stay up to date with patches and fixes on your schedule, not the distro’s.

-

Modern practices. Align your Perl workflow with the kinds of practices standard in other languages (think

virtualenv,rbenv,nvm, etc.).

“But it just works…”

I know the argument. You’ve got a handful of scripts, or maybe a cron job or two, and they seem fine. Why bother with all this?

Because “it just works” only holds true until:

-

You upgrade your OS and Perl changes under you.

-

A script stops working and you don’t know why.

-

You want to install a module and suddenly

aptis yelling at you about conflicts. -

You realise the module you need requires Perl 5.34, but your system has 5.16.

Don’t wait for it to break. Get ahead of it.

The first step

You don’t have to refactor your entire setup overnight. But you can do this:

-

Install

perlbrewand try it out. -

Start a new project with

Cartonto lock dependencies. -

Choose a current version of Perl and commit to using it moving forward.

Once you’ve seen how smooth things can be with a clean, controlled Perl environment, you won’t want to go back.

TL;DR

Your system Perl is for your operating system—not for your apps. Treat it as off-limits. Modern Perl deserves modern tools, and so do you.

Take the first step. Your future self (and probably your ops team) will thank you.

The post Stop using your system Perl first appeared on Perl Hacks.

Recently, Gabor ran a poll in a Perl Facebook community asking which version of Perl people used in their production systems. The results were eye-opening—and not in a good way. A surprisingly large number of developers replied with something along the lines of “whatever version is included with my OS.”

If that’s you, this post is for you. I don’t say that to shame or scold—many of us started out this way. But if you’re serious about writing and running Perl in 2025, it’s time to stop relying on the system Perl.

Let’s unpack why.

What is “System Perl”?

When we talk about the system Perl, we mean the version of Perl that comes pre-installed with your operating system—be it a Linux distro like Debian or CentOS, or even macOS. This is the version used by the OS itself for various internal tasks and scripts. It’s typically located in /usr/bin/perl and tied closely to system packages.

It’s tempting to just use what’s already there. But that decision brings a lot of hidden baggage—and some very real risks.

Which versions of Perl are officially supported?

The Perl Core Support Policy states that only the two most recent stable release series of Perl are supported by the Perl development team [Update: fixed text in previous sentence]. As of mid-2025, that means:

Perl 5.40 (released May 2024)

Perl 5.38 (released July 2023)

If you’re using anything older—like 5.36, 5.32, or 5.16—you’re outside the officially supported window. That means no guaranteed bug fixes, security patches, or compatibility updates from core CPAN tools like ExtUtils::MakeMaker, Module::Build, or Test::More.

Using an old system Perl often means you’re several versions behind , and no one upstream is responsible for keeping that working anymore.

Why using System Perl is a problem

1. It’s often outdated

System Perl is frozen in time—usually the version that was current when the OS release cycle began. Depending on your distro, that could mean Perl 5.10, 5.16, or 5.26—versions that are years behind the latest stable Perl (currently 5.40).

This means you’re missing out on:

New language features (

builtin,class/method/field,signatures,try/catch)Performance improvements

Bug fixes

Critical security patches

Support: anything older than Perl 5.38 is no longer officially maintained by the core Perl team

If you’ve ever looked at modern Perl documentation and found your code mysteriously breaking, chances are your system Perl is too old.

2. It’s not yours to mess with

System Perl isn’t just a convenience—it’s a dependency. Your operating system relies on it for package management, system maintenance tasks, and assorted glue scripts. If you install or upgrade CPAN modules into the system Perl (especially with cpan or cpanm as root), you run the risk of breaking something your OS depends on.

It’s a kind of dependency hell that’s completely avoidable— if you stop using system Perl.

3. It’s a barrier to portability and reproducibility

When you use system Perl, your environment is essentially defined by your distro. That’s fine until you want to:

Move your application to another system

Run CI tests on a different platform

Upgrade your OS

Onboard a new developer

You lose the ability to create predictable, portable environments. That’s not a luxury— it’s a requirement for sane development in modern software teams.

What you should be doing instead

1. Use perlbrew or plenv

These tools let you install multiple versions of Perl in your home directory and switch between them easily. Want to test your code on Perl 5.32 and 5.40? perlbrew makes it a breeze.

You get:

A clean separation from system Perl

The freedom to upgrade or downgrade at will

Zero risk of breaking your OS

It takes minutes to set up and pays for itself tenfold in flexibility.

2. Use local::lib or Carton

Managing CPAN dependencies globally is a recipe for pain. Instead, use:

local::lib: keeps modules in your home directory.Carton: locks your CPAN dependencies (likenpmorpip) so deployments are repeatable.

Your production system should run with exactly the same modules and versions as your dev environment. Carton helps you achieve that.

3. Consider Docker

If you’re building larger apps or APIs, containerising your Perl environment ensures true consistency across dev, test, and production. You can even start from a system Perl inside the container—as long as it’s isolated and under your control.

You never want to be the person debugging a bug that only happens on production, because prod is using the distro’s ancient Perl and no one can remember which CPAN modules got installed by hand.

The benefits of managing your own Perl

Once you step away from the system Perl, you gain:

Access to the full language. Use the latest features without backports or compatibility hacks.

Freedom from fear. Install CPAN modules freely without the risk of breaking your OS.

Portability. Move projects between machines or teams with minimal friction.

Better testing. Easily test your code across multiple Perl versions.

Security. Stay up to date with patches and fixes on your schedule, not the distro’s.

Modern practices. Align your Perl workflow with the kinds of practices standard in other languages (think

virtualenv,rbenv,nvm, etc.).

“But it just works…”

I know the argument. You’ve got a handful of scripts, or maybe a cron job or two, and they seem fine. Why bother with all this?

Because “it just works” only holds true until:

You upgrade your OS and Perl changes under you.

A script stops working and you don’t know why.

You want to install a module and suddenly

aptis yelling at you about conflicts.You realise the module you need requires Perl 5.34, but your system has 5.16.

Don’t wait for it to break. Get ahead of it.

The first step

You don’t have to refactor your entire setup overnight. But you can do this:

Install

perlbrewand try it out.Start a new project with

Cartonto lock dependencies.Choose a current version of Perl and commit to using it moving forward.

Once you’ve seen how smooth things can be with a clean, controlled Perl environment, you won’t want to go back.

TL;DR

Your system Perl is for your operating system—not for your apps. Treat it as off-limits. Modern Perl deserves modern tools, and so do you.

Take the first step. Your future self (and probably your ops team) will thank you.

The post Stop using your system Perl first appeared on Perl Hacks.

Earlier this week, I read a post from someone who failed a job interview because they used a hash slice in some sample code and the interviewer didn’t believe it would work.

That’s not just wrong — it’s a teachable moment. Perl has several kinds of slices, and they’re all powerful tools for writing expressive, concise, idiomatic code. If you’re not familiar with them, you’re missing out on one of Perl’s secret superpowers.

In this post, I’ll walk through all the main types of slices in Perl — from the basics to the modern conveniences added in recent versions — using a consistent, real-world-ish example. Whether you’re new to slices or already slinging %hash{...} like a pro, I hope you’ll find something useful here.

The Scenario

Let’s imagine you’re writing code to manage employees in a company. You’ve got an array of employee names and a hash of employee details.

my @employees = qw(alice bob carol dave eve); my %details = ( alice => 'Engineering', bob => 'Marketing', carol => 'HR', dave => 'Engineering', eve => 'Sales', );

We’ll use these throughout to demonstrate each kind of slice.

1. List Slices

List slices are slices from a literal list. They let you pick multiple values from a list in a single operation:

my @subset = (qw(alice bob carol dave eve))[1, 3];

# @subset = ('bob', 'dave')You can also destructure directly:

my ($employee1, $employee2) = (qw(alice bob carol))[0, 2]; # $employee1 = 'alice', $employee2 = 'carol'

Simple, readable, and no loop required.

2. Array Slices

Array slices are just like list slices, but from an array variable:

my @subset = @employees[0, 2, 4];

# @subset = ('alice', 'carol', 'eve')You can also assign into an array slice to update multiple elements:

@employees[1, 3] = ('beatrice', 'daniel');

# @employees = ('alice', 'beatrice', 'carol', 'daniel', 'eve')Handy for bulk updates without writing explicit loops.

3. Hash Slices

This is where some people start to raise eyebrows — but hash slices are perfectly valid Perl and incredibly useful.

Let’s grab departments for a few employees:

my @departments = @details{'alice', 'carol', 'eve'};

# @departments = ('Engineering', 'HR', 'Sales')The @ sigil here indicates that we’re asking for a list of values, even though %details is a hash.

You can assign into a hash slice just as easily:

@details{'bob', 'carol'} = ('Support', 'Legal');This kind of bulk update is especially useful when processing structured data or transforming API responses.

4. Index/Value Array Slices (Perl 5.20+)

Starting in Perl 5.20, you can use %array[...] to return index/value pairs — a very elegant way to extract and preserve positions in a single step.

my @indexed = %employees[1, 3]; # @indexed = (1 => 'bob', 3 => 'dave')

You get a flat list of index/value pairs. This is particularly helpful when mapping or reordering data based on array positions.

You can even delete from an array this way:

my @removed = delete %employees[0, 4]; # @removed = (0 => 'alice', 4 => 'eve')

And afterwards you’ll have this:

# @employees = (undef, 'bob', 'carol', 'dave', undef)

5. Key/Value Hash Slices (Perl 5.20+)

The final type of slice — also added in Perl 5.20 — is the %hash{...} key/value slice. This returns a flat list of key/value pairs, perfect for passing to functions that expect key/value lists.

my @kv = %details{'alice', 'dave'};

# @kv = ('alice', 'Engineering', 'dave', 'Engineering')You can construct a new hash from this easily:

my %engineering = (%details{'alice', 'dave'});This avoids intermediate looping and makes your code clear and declarative.

Summary: Five Flavours of Slice

| Type | Syntax | Returns | Added in |

|---|---|---|---|

| List slice | (list)[@indices] |

Values | Ancient |

| Array slice | @array[@indices] |

Values | Ancient |

| Hash slice | @hash{@keys} |

Values | Ancient |

| Index/value array slice | %array[@indices] |

Index-value pairs | Perl 5.20 |

| Key/value hash slice | %hash{@keys} |

Key-value pairs | Perl 5.20 |

Final Thoughts

If someone tells you that @hash{...} or %array[...] doesn’t work — they’re either out of date or mistaken. These forms are standard, powerful, and idiomatic Perl.

Slices make your code cleaner, clearer, and more concise. They let you express what you want directly, without boilerplate. And yes — they’re perfectly interview-appropriate.

So next time you’re reaching for a loop to pluck a few values from a hash or an array, pause and ask: could this be a slice?

If the answer’s yes — go ahead and slice away.

The post A Slice of Perl first appeared on Perl Hacks.

Earlier this week, I read a post from someone who failed a job interview because they used a hash slice in some sample code and the interviewer didn’t believe it would work.

That’s not just wrong — it’s a teachable moment. Perl has several kinds of slices, and they’re all powerful tools for writing expressive, concise, idiomatic code. If you’re not familiar with them, you’re missing out on one of Perl’s secret superpowers.

In this post, I’ll walk through all the main types of slices in Perl — from the basics to the modern conveniences added in recent versions — using a consistent, real-world-ish example. Whether you’re new to slices or already slinging %hash{...} like a pro, I hope you’ll find something useful here.

The Scenario

Let’s imagine you’re writing code to manage employees in a company. You’ve got an array of employee names and a hash of employee details.

my @employees = qw(alice bob carol dave eve);

my %details = (

alice => 'Engineering',

bob => 'Marketing',

carol => 'HR',

dave => 'Engineering',

eve => 'Sales',

);

We’ll use these throughout to demonstrate each kind of slice.

1. List Slices

List slices are slices from a literal list. They let you pick multiple values from a list in a single operation:

my @subset = (qw(alice bob carol dave eve))[1, 3];

# @subset = ('bob', 'dave')

You can also destructure directly:

my ($employee1, $employee2) = (qw(alice bob carol))[0, 2];

# $employee1 = 'alice', $employee2 = 'carol'

Simple, readable, and no loop required.

2. Array Slices

Array slices are just like list slices, but from an array variable:

my @subset = @employees[0, 2, 4];

# @subset = ('alice', 'carol', 'eve')

You can also assign into an array slice to update multiple elements:

@employees[1, 3] = ('beatrice', 'daniel');

# @employees = ('alice', 'beatrice', 'carol', 'daniel', 'eve')

Handy for bulk updates without writing explicit loops.

3. Hash Slices

This is where some people start to raise eyebrows — but hash slices are perfectly valid Perl and incredibly useful.

Let’s grab departments for a few employees:

my @departments = @details{'alice', 'carol', 'eve'};

# @departments = ('Engineering', 'HR', 'Sales')

The @ sigil here indicates that we’re asking for a list of values, even though %details is a hash.

You can assign into a hash slice just as easily:

@details{'bob', 'carol'} = ('Support', 'Legal');

This kind of bulk update is especially useful when processing structured data or transforming API responses.

4. Index/Value Array Slices (Perl 5.20+)

Starting in Perl 5.20 , you can use %array[...] to return index/value pairs — a very elegant way to extract and preserve positions in a single step.

my @indexed = %employees[1, 3];

# @indexed = (1 => 'bob', 3 => 'dave')

You get a flat list of index/value pairs. This is particularly helpful when mapping or reordering data based on array positions.

You can even delete from an array this way:

my @removed = delete %employees[0, 4];

# @removed = (0 => 'alice', 4 => 'eve')

And afterwards you’ll have this:

# @employees = (undef, 'bob', 'carol', 'dave', undef)

5. Key/Value Hash Slices (Perl 5.20+)

The final type of slice — also added in Perl 5.20 — is the %hash{...} key/value slice. This returns a flat list of key/value pairs, perfect for passing to functions that expect key/value lists.

my @kv = %details{'alice', 'dave'};

# @kv = ('alice', 'Engineering', 'dave', 'Engineering')

You can construct a new hash from this easily:

my %engineering = (%details{'alice', 'dave'});

This avoids intermediate looping and makes your code clear and declarative.

Summary: Five Flavours of Slice

| Type | Syntax | Returns | Added in |

|---|---|---|---|

| List slice | (list)[@indices] |

Values | Ancient |

| Array slice | @array[@indices] |

Values | Ancient |

| Hash slice | @hash{@keys} |

Values | Ancient |

| Index/value array slice | %array[@indices] |

Index-value pairs | Perl 5.20 |

| Key/value hash slice | %hash{@keys} |

Key-value pairs | Perl 5.20 |

Final Thoughts

If someone tells you that @hash{...} or %array[...] doesn’t work — they’re either out of date or mistaken. These forms are standard, powerful, and idiomatic Perl.

Slices make your code cleaner, clearer, and more concise. They let you express what you want directly, without boilerplate. And yes — they’re perfectly interview-appropriate.

So next time you’re reaching for a loop to pluck a few values from a hash or an array, pause and ask: could this be a slice?

If the answer’s yes — go ahead and slice away.

The post A Slice of Perl first appeared on Perl Hacks.

Back in January, I wrote a blog post about adding JSON-LD to your web pages to make it easier for Google to understand what they were about. The example I used was my ReadABooker site, which encourages people to read more Booker Prize shortlisted novels (and to do so by buying them using my Amazon Associate links).

I’m slightly sad to report that in the five months since I implemented that change, visits to the website have remained pretty much static and I have yet to make my fortune from Amazon kickbacks. But that’s ok, we just use it as an excuse to learn more about SEO and to apply more tweaks to the website.

I’ve been using the most excellent ARefs site to get information about how good the on-page SEO is for many of my sites. Every couple of weeks, ARefs crawls the site and will give me a list of suggestions of things I can improve. And for a long time, I had been putting off dealing with one of the biggest issues – because it seemed so difficult.

The site didn’t have enough text on it. You could get lists of Booker years, authors and books. And, eventually, you’d end up on a book page where, hopefully, you’d be tempted to buy a book. But the book pages were pretty bare – just the title, author, year they were short-listed and an image of the cover. Oh, and the all-important “Buy from Amazon” button. AHrefs was insistent that I needed more text (at least a hundred words) on a page in order for Google to take an interest in it. And given that my database of Booker books included hundreds of books by hundreds of authors, that seemed like a big job to take on.

But, a few days ago, I saw a solution to that problem – I could ask ChatGPT for the text.

I wrote a blog post in April about generating a daily-updating website using ChatGPT. This would be similar, but instead of writing the text directly to a Jekyll website, I’d write it to the database and add it to the templates that generate the website.

Adapting the code was very quick. Here’s the finished version for the book blurbs.

#!/usr/bin/env perl

use strict;

use warnings;

use builtin qw[trim];

use feature 'say';

use OpenAPI::Client::OpenAI;

use Time::Piece;

use Encode qw[encode];

use Booker::Schema;

my $sch = Booker::Schema->get_schema;

my $count = 0;

my $books = $sch->resultset('Book');

while ($count < 20 and my $book = $books->next) {

next if defined $book->blurb;

++$count;

my $blurb = describe_title($book);

$book->update({ blurb => $blurb });

}

sub describe_title {

my ($book) = @_;

my ($title, $author) = ($book->title, $book->author->name);

my $debug = 1;

my $api_key = $ENV{"OPENAI_API_KEY"} or die "OPENAI_API_KEY is not set\n";

my $client = OpenAPI::Client::OpenAI->new;

my $prompt = join " ",

'Produce a 100-200 word description for the book',

"'$title' by $author",

'Do not mention the fact that the book was short-listed for (or won)',

'the Booker Prize';

my $res = $client->createChatCompletion({

body => {

model => 'gpt-4o',

# model => 'gpt-4.1-nano',

messages => [

{ role => 'system', content => 'You are someone who knows a lot about popular literature.' },

{ role => 'user', content => $prompt },

],

temperature => 1.0,

},

});

my $text = $res->res->json->{choices}[0]{message}{content};

$text = encode('UTF-8', $text);

say $text if $debug;

return $text;

}There are a couple of points to note:

- I have DBIC classes to deal with the database interaction, so that’s all really simple. Before running this code, I added new columns to the relevant tables and re-ran my process for generating the DBIC classes

- I put a throttle on the processing, so each run would only update twenty books – I slightly paranoid about using too many requests and annoying OpenAI. That wasn’t a problem at all

- The hardest thing (not that it was very hard at all) was to tweak the prompt to give me exactly what I wanted

I then produced a similar program that did the same thing for authors. It’s similar enough that the next time I need something like this, I’ll spend some time turning it into a generic program.

I then added the new database fields to the book and author templates and re-published the site. You can see the results in, for example, the pages for Salman Rushie and Midnight’s Children.

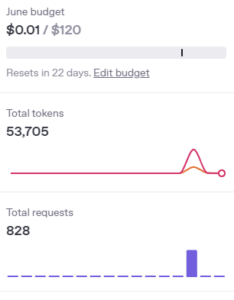

I had one more slight concern going into this project. I pay for access to the ChatGPT API. I usually have about $10 in my pre-paid account and I really had no idea how much this was going to cost me. I needed have worried. Here’s a graph showing the bump in my API usage on the day I ran the code for all books and authors:

But you can also see that my total costs for the month so far are $0.01!

So, all-in-all, I call that a success and I’ll be using similar techniques to generate content for some other websites.

The post Generating Content with ChatGPT first appeared on Perl Hacks.

Back in January, I wrote a blog post about adding JSON-LD to your web pages to make it easier for Google to understand what they were about. The example I used was my ReadABooker site, which encourages people to read more Booker Prize shortlisted novels (and to do so by buying them using my Amazon Associate links).

I’m slightly sad to report that in the five months since I implemented that change, visits to the website have remained pretty much static and I have yet to make my fortune from Amazon kickbacks. But that’s ok, we just use it as an excuse to learn more about SEO and to apply more tweaks to the website.

I’ve been using the most excellent ARefs site to get information about how good the on-page SEO is for many of my sites. Every couple of weeks, ARefs crawls the site and will give me a list of suggestions of things I can improve. And for a long time, I had been putting off dealing with one of the biggest issues – because it seemed so difficult.

The site didn’t have enough text on it. You could get lists of Booker years, authors and books. And, eventually, you’d end up on a book page where, hopefully, you’d be tempted to buy a book. But the book pages were pretty bare – just the title, author, year they were short-listed and an image of the cover. Oh, and the all-important “Buy from Amazon” button. AHrefs was insistent that I needed more text (at least a hundred words) on a page in order for Google to take an interest in it. And given that my database of Booker books included hundreds of books by hundreds of authors, that seemed like a big job to take on.

But, a few days ago, I saw a solution to that problem – I could ask ChatGPT for the text.

I wrote a blog post in April about generating a daily-updating website using ChatGPT. This would be similar, but instead of writing the text directly to a Jekyll website, I’d write it to the database and add it to the templates that generate the website.

Adapting the code was very quick. Here’s the finished version for the book blurbs.

#!/usr/bin/env perl

use strict;

use warnings;

use builtin qw[trim];

use feature 'say';

use OpenAPI::Client::OpenAI;

use Time::Piece;

use Encode qw[encode];

use Booker::Schema;

my $sch = Booker::Schema->get_schema;

my $count = 0;

my $books = $sch->resultset('Book');

while ($count < 20 and my $book = $books->next) {

next if defined $book->blurb;

++$count;

my $blurb = describe_title($book);

$book->update({ blurb => $blurb });

}

sub describe_title {

my ($book) = @_;

my ($title, $author) = ($book->title, $book->author->name);

my $debug = 1;

my $api_key = $ENV{"OPENAI_API_KEY"} or die "OPENAI_API_KEY is not set\n";

my $client = OpenAPI::Client::OpenAI->new;

my $prompt = join " ",

'Produce a 100-200 word description for the book',

"'$title' by $author",

'Do not mention the fact that the book was short-listed for (or won)',

'the Booker Prize';

my $res = $client->createChatCompletion({

body => {

model => 'gpt-4o',

# model => 'gpt-4.1-nano',

messages => [

{ role => 'system', content => 'You are someone who knows a lot about popular literature.' },

{ role => 'user', content => $prompt },

],

temperature => 1.0,

},

});

my $text = $res->res->json->{choices}[0]{message}{content};

$text = encode('UTF-8', $text);

say $text if $debug;

return $text;

}

There are a couple of points to note:

- I have DBIC classes to deal with the database interaction, so that’s all really simple. Before running this code, I added new columns to the relevant tables and re-ran my process for generating the DBIC classes

- I put a throttle on the processing, so each run would only update twenty books – I slightly paranoid about using too many requests and annoying OpenAI. That wasn’t a problem at all

- The hardest thing (not that it was very hard at all) was to tweak the prompt to give me exactly what I wanted

I then produced a similar program that did the same thing for authors. It’s similar enough that the next time I need something like this, I’ll spend some time turning it into a generic program.

I then added the new database fields to the book and author templates and re-published the site. You can see the results in, for example, the pages for Salman Rushie and Midnight’s Children.

I had one more slight concern going into this project. I pay for access to the ChatGPT API. I usually have about $10 in my pre-paid account and I really had no idea how much this was going to cost me. I needed have worried. Here’s a graph showing the bump in my API usage on the day I ran the code for all books and authors:

But you can also see that my total costs for the month so far are $0.01!

So, all-in-all, I call that a success and I’ll be using similar techniques to generate content for some other websites.

The post Generating Content with ChatGPT first appeared on Perl Hacks.

Last summer, I wrote a couple of posts about my lightweight, roll-your-own approach to deploying PSGI (Dancer) web apps:

In those posts, I described how I avoided heavyweight deployment tools by writing a small, custom Perl script (app_service) to start and manage them. It was minimal, transparent, and easy to replicate.

It also wasn’t great.

What Changed?

The system mostly worked, but it had a number of growing pains:

- It didn’t integrate with the host operating system in a meaningful way.

- Services weren’t resilient — no automatic restarts on failure.

- There was no logging consolidation, no dependency management (e.g., waiting for the network), and no visibility in tools like

systemctl. - If a service crashed, I’d usually find out via

curl, notjournalctl.

As I started running more apps, this ad-hoc approach became harder to justify. It was time to grow up.

Enter psgi-systemd-deploy

So today (with some help from ChatGPT) I wrote psgi-systemd-deploy — a simple, declarative deployment tool for PSGI apps that integrates directly with systemd. It generates .service files for your apps from environment-specific config and handles all the fiddly bits (paths, ports, logging, restart policies, etc.) with minimal fuss.

Key benefits:

-

- Declarative config via

.deploy.env - Optional

.envfile support for application-specific settings - Environment-aware templating using

envsubst - No lock-in — it just writes

systemdunits you can inspect and manage yourself

- Declarative config via

- Safe — supports a

--dry-runmode so you can preview changes before deploying - Convenient — includes a

run_allhelper script for managing all your deployed apps with one command

A Real-World Example

You may know about my Line of Succession web site (introductory talk). This is one of the Dancer apps I’ve been talking about. To deploy it, I wrote a .deploy.env file that looks like this:

WEBAPP_SERVICE_NAME=succession WEBAPP_DESC="British Line of Succession" WEBAPP_WORKDIR=/opt/succession WEBAPP_USER=succession WEBAPP_GROUP=psacln WEBAPP_PORT=2222 WEBAPP_WORKER_COUNT=5 WEBAPP_APP_PRELOAD=1

And optionally a .env file for app-specific settings (e.g., database credentials). Then I run:

$ /path/to/psgi-systemd-deploy/deploy.sh

And that’s it. The app is now a first-class systemd service, automatically started on boot and restartable with systemctl.

Managing All Your Apps with run_all

Once you’ve deployed several PSGI apps using psgi-systemd-deploy, you’ll probably want an easy way to manage them all at once. That’s where the run_all script comes in.

It’s a simple but powerful wrapper around systemctl that automatically discovers all deployed services by scanning for .deploy.env files. That means no need to hard-code service names or paths — it just works, based on the configuration you’ve already provided.

Here’s how you might use it:

# Restart all PSGI apps $ run_all restart # Show current status $ run_all status # Stop them all (e.g., for maintenance) $ run_all stop

And if you want machine-readable output for scripting or monitoring, there’s a --json flag:

$ run_all --json is-active | jq .

[

{

"service": "succession.service",

"action": "is-active",

"status": 0,

"output": "active"

},

{

"service": "klortho.service",

"action": "is-active",

"status": 0,

"output": "active"

}

]Under the hood, run_all uses the same environment-driven model as the rest of the system — no surprises, no additional config files. It’s just a lightweight helper that understands your layout and automates the boring bits.

It’s not a replacement for systemctl, but it makes common tasks across many services far more convenient — especially during development, deployment, or server reboots.

A Clean Break

The goal of psgi-systemd-deploy isn’t to replace Docker, K8s, or full-featured PaaS systems. It’s for the rest of us — folks running VPSes or bare-metal boxes where PSGI apps just need to run reliably and predictably under the OS’s own tools.

If you’ve been rolling your own init scripts, cron jobs, or nohup-based hacks, give it a look. It’s clean, simple, and reliable — and a solid step up from duct tape.

The post Deploying Dancer Apps – The Next Generation first appeared on Perl Hacks.

Last summer, I wrote a couple of posts about my lightweight, roll-your-own approach to deploying PSGI (Dancer) web apps:

In those posts, I described how I avoided heavyweight deployment tools by writing a small, custom Perl script (app_service) to start and manage them. It was minimal, transparent, and easy to replicate.

It also wasn’t great.

What Changed?

The system mostly worked, but it had a number of growing pains:

- It didn’t integrate with the host operating system in a meaningful way.

- Services weren’t resilient — no automatic restarts on failure.

- There was no logging consolidation, no dependency management (e.g., waiting for the network), and no visibility in tools like

systemctl. - If a service crashed, I’d usually find out via

curl, notjournalctl.

As I started running more apps, this ad-hoc approach became harder to justify. It was time to grow up.

Enter psgi-systemd-deploy

So today (with some help from ChatGPT) I wrote psgi-systemd-deploy — a simple, declarative deployment tool for PSGI apps that integrates directly with systemd. It generates .service files for your apps from environment-specific config and handles all the fiddly bits (paths, ports, logging, restart policies, etc.) with minimal fuss.

Key benefits:

-

Declarative config via

.deploy.env -

Optional

.envfile support for application-specific settings -

Environment-aware templating using

envsubst -

No lock-in — it just writes

systemdunits you can inspect and manage yourself

-

Declarative config via

Safe — supports a

--dry-runmode so you can preview changes before deployingConvenient — includes a

run_allhelper script for managing all your deployed apps with one command

A Real-World Example

You may know about my Line of Succession web site (introductory talk). This is one of the Dancer apps I’ve been talking about. To deploy it, I wrote a .deploy.env file that looks like this:

WEBAPP_SERVICE_NAME=succession

WEBAPP_DESC="British Line of Succession"

WEBAPP_WORKDIR=/opt/succession

WEBAPP_USER=succession

WEBAPP_GROUP=psacln

WEBAPP_PORT=2222

WEBAPP_WORKER_COUNT=5

WEBAPP_APP_PRELOAD=1

And optionally a .env file for app-specific settings (e.g., database credentials). Then I run:

$ /path/to/psgi-systemd-deploy/deploy.sh

And that’s it. The app is now a first-class systemd service, automatically started on boot and restartable with systemctl.

Managing All Your Apps with run_all

Once you’ve deployed several PSGI apps using psgi-systemd-deploy, you’ll probably want an easy way to manage them all at once. That’s where the run_all script comes in.

It’s a simple but powerful wrapper around systemctl that automatically discovers all deployed services by scanning for .deploy.env files. That means no need to hard-code service names or paths — it just works, based on the configuration you’ve already provided.

Here’s how you might use it:

# Restart all PSGI apps

$ run_all restart

# Show current status

$ run_all status

# Stop them all (e.g., for maintenance)

$ run_all stop

And if you want machine-readable output for scripting or monitoring, there’s a --json flag:

$ run_all --json is-active | jq .

[

{

"service": "succession.service",

"action": "is-active",

"status": 0,

"output": "active"

},

{

"service": "klortho.service",

"action": "is-active",

"status": 0,

"output": "active"

}

]

Under the hood, run_all uses the same environment-driven model as the rest of the system — no surprises, no additional config files. It’s just a lightweight helper that understands your layout and automates the boring bits.

It’s not a replacement for systemctl, but it makes common tasks across many services far more convenient — especially during development, deployment, or server reboots.

A Clean Break

The goal of psgi-systemd-deploy isn’t to replace Docker, K8s, or full-featured PaaS systems. It’s for the rest of us — folks running VPSes or bare-metal boxes where PSGI apps just need to run reliably and predictably under the OS’s own tools.

If you’ve been rolling your own init scripts, cron jobs, or nohup-based hacks, give it a look. It’s clean, simple, and reliable — and a solid step up from duct tape.

The post Deploying Dancer Apps – The Next Generation first appeared on Perl Hacks.

Like most developers, I have a mental folder labelled “useful little tools I’ll probably never build.” Small utilities, quality-of-life scripts, automations — they’d save time, but not enough to justify the overhead of building them. So they stay stuck in limbo.

That changed when I started using AI as a regular part of my development workflow.

Now, when I hit one of those recurring minor annoyances — something just frictiony enough to slow me down — I open a ChatGPT tab. Twenty minutes later, I usually have a working solution. Not always perfect, but almost always 90% of the way there. And once that initial burst of momentum is going, finishing it off is easy.

It’s not quite mind-reading. But it is like having a superpowered pair programmer on tap.

The Problem

Obviously, I do a lot of Perl development. When working on a Perl project, it’s common to have one or more lib/ directories in the repo that contain the project’s modules. To run test scripts or local tools, I often need to set the PERL5LIB environment variable so that Perl can find those modules.

But I’ve got a lot of Perl projects — often nested in folders like ~/git, and sometimes with extra lib/ directories for testing or shared code. And I switch between them frequently. Typing:

export PERL5LIB=lib

…over and over gets boring fast. And worse, if you forget to do it, your test script breaks with a misleading “Can’t locate Foo/Bar.pm” error.

What I wanted was this:

-

Every time I

cdinto a directory, if there are any validlib/subdirectories beneath it, setPERL5LIBautomatically. -

Only include

lib/dirs that actually contain.pmfiles. -

Skip junk like

.vscode,blib, and old release folders likeMyModule-1.23/. -

Don’t scan the entire world if I

cd ~/git, which contains hundreds of repos. -

Show me what it’s doing, and let me test it in dry-run mode.

The Solution

With ChatGPT, I built a drop-in Bash function in about half an hour that does exactly that. It’s now saved as perl5lib_auto.sh, and it:

-

Wraps

cd()to trigger a scan after every directory change -

Finds all qualifying

lib/directories beneath the current directory -

Filters them using simple rules:

-

Must contain

.pmfiles -

Must not be under

.vscode/,.blib/, or versioned build folders

-

-

Excludes specific top-level directories (like

~/git) by default -

Lets you configure everything via environment variables

-

Offers

verbose,dry-run, andforcemodes -

Can append to or overwrite your existing

PERL5LIB

You drop it in your ~/.bashrc (or wherever you like), and your shell just becomes a little bit smarter.

Usage Example

source ~/bin/perl5lib_auto.sh cd ~/code/MyModule # => PERL5LIB set to: /home/user/code/MyModule/lib PERL5LIB_VERBOSE=1 cd ~/code/AnotherApp # => [PERL5LIB] Found 2 eligible lib dir(s): # => /home/user/code/AnotherApp/lib # => /home/user/code/AnotherApp/t/lib # => PERL5LIB set to: /home/user/code/AnotherApp/lib:/home/user/code/AnotherApp/t/lib

You can also set environment variables to customise behaviour:

export PERL5LIB_EXCLUDE_DIRS="$HOME/git:$HOME/legacy" export PERL5LIB_EXCLUDE_PATTERNS=".vscode:blib" export PERL5LIB_LIB_CAP=5 export PERL5LIB_APPEND=1

Or simulate what it would do:

PERL5LIB_DRYRUN=1 cd ~/code/BigProject

Try It Yourself

The full script is available on GitHub:

https://github.com/davorg/perl5lib_auto

https://github.com/davorg/perl5lib_auto

I’d love to hear how you use it — or how you’d improve it. Feel free to:

-

Star the repo

Star the repo -

Open issues for suggestions or bugs

Open issues for suggestions or bugs -

Send pull requests with fixes, improvements, or completely new ideas

Send pull requests with fixes, improvements, or completely new ideas

It’s a small tool, but it’s already saved me a surprising amount of friction. If you’re a Perl hacker who jumps between projects regularly, give it a try — and maybe give AI co-coding a try too while you’re at it.

What useful little utilities have you written with help from an AI pair-programmer?

The post Turning AI into a Developer Superpower: The PERL5LIB Auto-Setter first appeared on Perl Hacks.

Like most developers, I have a mental folder labelled “useful little tools I’ll probably never build.” Small utilities, quality-of-life scripts, automations — they’d save time, but not enough to justify the overhead of building them. So they stay stuck in limbo.

That changed when I started using AI as a regular part of my development workflow.

Now, when I hit one of those recurring minor annoyances — something just frictiony enough to slow me down — I open a ChatGPT tab. Twenty minutes later, I usually have a working solution. Not always perfect, but almost always 90% of the way there. And once that initial burst of momentum is going, finishing it off is easy.

It’s not quite mind-reading. But it is like having a superpowered pair programmer on tap.

The Problem

Obviously, I do a lot of Perl development. When working on a Perl project, it’s common to have one or more lib/ directories in the repo that contain the project’s modules. To run test scripts or local tools, I often need to set the PERL5LIB environment variable so that Perl can find those modules.

But I’ve got a lot of Perl projects — often nested in folders like ~/git, and sometimes with extra lib/ directories for testing or shared code. And I switch between them frequently. Typing:

export PERL5LIB=lib

…over and over gets boring fast. And worse, if you forget to do it, your test script breaks with a misleading “Can’t locate Foo/Bar.pm” error.

What I wanted was this:

Every time I

cdinto a directory, if there are any validlib/subdirectories beneath it, setPERL5LIBautomatically.Only include

lib/dirs that actually contain.pmfiles.Skip junk like

.vscode,blib, and old release folders likeMyModule-1.23/.Don’t scan the entire world if I

cd ~/git, which contains hundreds of repos.Show me what it’s doing, and let me test it in dry-run mode.

The Solution

With ChatGPT, I built a drop-in Bash function in about half an hour that does exactly that. It’s now saved as perl5lib_auto.sh, and it:

Wraps

cd()to trigger a scan after every directory changeFinds all qualifying

lib/directories beneath the current directoryFilters them using simple rules:

Excludes specific top-level directories (like

~/git) by defaultLets you configure everything via environment variables

Offers

verbose,dry-run, andforcemodesCan append to or overwrite your existing

PERL5LIB

You drop it in your ~/.bashrc (or wherever you like), and your shell just becomes a little bit smarter.

Usage Example

source ~/bin/perl5lib_auto.sh

cd ~/code/MyModule

# => PERL5LIB set to: /home/user/code/MyModule/lib

PERL5LIB_VERBOSE=1 cd ~/code/AnotherApp

# => [PERL5LIB] Found 2 eligible lib dir(s):

# => /home/user/code/AnotherApp/lib

# => /home/user/code/AnotherApp/t/lib

# => PERL5LIB set to: /home/user/code/AnotherApp/lib:/home/user/code/AnotherApp/t/lib

You can also set environment variables to customise behaviour:

export PERL5LIB_EXCLUDE_DIRS="$HOME/git:$HOME/legacy"

export PERL5LIB_EXCLUDE_PATTERNS=".vscode:blib"

export PERL5LIB_LIB_CAP=5

export PERL5LIB_APPEND=1

Or simulate what it would do:

PERL5LIB_DRYRUN=1 cd ~/code/BigProject

Try It Yourself

The full script is available on GitHub:

I’d love to hear how you use it — or how you’d improve it. Feel free to:

⭐ Star the repo

🐛 Open issues for suggestions or bugs

🔀 Send pull requests with fixes, improvements, or completely new ideas

It’s a small tool, but it’s already saved me a surprising amount of friction. If you’re a Perl hacker who jumps between projects regularly, give it a try — and maybe give AI co-coding a try too while you’re at it.

What useful little utilities have you written with help from an AI pair-programmer?

The post Turning AI into a Developer Superpower: The PERL5LIB Auto-Setter first appeared on Perl Hacks.

Building a website in a day — with help from ChatGPT

A few days ago, I looked at an unused domain I owned — balham.org — and thought: “There must be a way to make this useful… and maybe even make it pay for itself.”

So I set myself a challenge: one day to build something genuinely useful. A site that served a real audience (people in and around Balham), that was fun to build, and maybe could be turned into a small revenue stream.

It was also a great excuse to get properly stuck into Jekyll and the Minimal Mistakes theme — both of which I’d dabbled with before, but never used in anger. And, crucially, I wasn’t working alone: I had ChatGPT as a development assistant, sounding board, researcher, and occasional bug-hunter.

The Idea

Balham is a reasonably affluent, busy part of south west London. It’s full of restaurants, cafés, gyms, independent shops, and people looking for things to do. It also has a surprisingly rich local history — from Victorian grandeur to Blitz-era tragedy.

I figured the site could be structured around three main pillars:

Throw in a curated homepage and maybe a blog later, and I had the bones of a useful site. The kind of thing that people would find via Google or get sent a link to by a friend.

The Stack

I wanted something static, fast, and easy to deploy. My toolchain ended up being:

- Jekyll for the site generator

- Minimal Mistakes as the theme

- GitHub Pages for hosting

- Custom YAML data files for businesses and events

- ChatGPT for everything from content generation to Liquid loops

The site is 100% static, with no backend, no databases, no CMS. It builds automatically on GitHub push, and is entirely hosted via GitHub Pages.

Step by Step: Building It

I gave us about six solid hours to build something real. Here’s what we did (“we” meaning me + ChatGPT):

1. Domain Setup and Scaffolding

The domain was already pointed at GitHub Pages, and I had a basic “Hello World” site in place. We cleared that out, set up a fresh Jekyll repo, and added a _config.yml that pointed at the Minimal Mistakes remote theme. No cloning or submodules.

2. Basic Site Structure

We decided to create four main pages:

We used the layout: single layout provided by Minimal Mistakes, and created custom permalinks so URLs were clean and extension-free.

3. The Business Directory

This was built from scratch using a YAML data file (_data/businesses.yml). ChatGPT gathered an initial list of 20 local businesses (restaurants, shops, pubs, etc.), checked their status, and added details like name, category, address, website, and a short description.

In the template, we looped over the list, rendered sections with conditional logic (e.g., don’t output the website link if it’s empty), and added anchor IDs to each entry so we could link to them directly from the homepage.

4. The Events Page

Built exactly the same way, but using _data/events.yml. To keep things realistic, we seeded a small number of example events and included a note inviting people to email us with new submissions.

5. Featured Listings

We wanted the homepage to show a curated set of businesses and events. So we created a third data file, _data/featured.yml, which just listed the names of the featured entries. Then in the homepage template, we used where and slugify to match names and pull in the full record from businesses.yml or events.yml. Super DRY.

6. Map and Media

We added a map of Balham as a hero image, styled responsively. Later we created a .responsive-inline-image class to embed supporting images on the history page without overwhelming the layout.

7. History Section with Real Archival Images

This turned out to be one of the most satisfying parts. We wrote five paragraphs covering key moments in Balham’s development — Victorian expansion, Du Cane Court, The Priory, the Blitz, and modern growth.

Then we sourced five CC-licensed or public domain images (from Wikimedia Commons and Geograph) to match each paragraph. Each was wrapped in a <figure> with proper attribution and a consistent CSS class. The result feels polished and informative.

8. Metadata, SEO, and Polish

We went through all the basics:

- Custom title and description in front matter for each page

- Open Graph tags and Twitter cards via site config

- A branded favicon using RealFaviconGenerator

- Added robots.txt, sitemap.xml, and a hand-crafted humans.txt

- Clean URLs, no .html extensions

- Anchored IDs for deep linking

9. Analytics and Search Console

We added GA4 tracking using Minimal Mistakes’ built-in support, and verified the domain with Google Search Console. A sitemap was submitted, and indexing kicked in within minutes.

10. Accessibility and Performance

We ran Lighthouse and WAVE tests. Accessibility came out at 100%. Performance dipped slightly due to Google Fonts and image size, but we did our best to optimise without sacrificing aesthetics.

11. Footer CTA

We added a site-wide footer call-to-action inviting people to email us with suggestions for businesses or events. This makes the site feel alive and participatory, even without a backend form.

What Worked Well

- ChatGPT as co-pilot: I could ask it for help with Liquid templates, CSS, content rewrites, and even bug-hunting. It let me move fast without getting bogged down in docs.

- Minimal Mistakes: It really is an excellent theme. Clean, accessible, flexible.

- Data-driven content: Keeping everything in YAML meant templates stayed simple, and the whole site is easy to update.

- Staying focused: We didn’t try to do everything. Four pages, one day, good polish.

What’s Next?

- Add category filtering to the directory

- Improve the OG/social card image

- Add structured JSON-LD for individual events and businesses

- Explore monetisation: affiliate links, sponsored listings, local partnerships

- Start some blog posts or “best of Balham” roundups

Final Thoughts

This started as a fun experiment: could I monetise an unused domain and finally learn Jekyll properly?

What I ended up with is a genuinely useful local resource — one that looks good, loads quickly, and has room to grow.

If you’re sitting on an unused domain, and you’ve got a free day and a chatbot at your side — you might be surprised what you can build.

Oh, and one final thing — obviously you can also get ChatGPT to write a blog post talking about the project :-)

Originally published at https://blog.dave.org.uk on March 23, 2025.

A few days ago, I looked at an unused domain I owned — balham.org — and thought: “There must be a way to make this useful… and maybe even make it pay for itself.”

So I set myself a challenge: one day to build something genuinely useful. A site that served a real audience (people in and around Balham), that was fun to build, and maybe could be turned into a small revenue stream.

It was also a great excuse to get properly stuck into Jekyll and the Minimal Mistakes theme — both of which I’d dabbled with before, but never used in anger. And, crucially, I wasn’t working alone: I had ChatGPT as a development assistant, sounding board, researcher, and occasional bug-hunter.

The Idea

Balham is a reasonably affluent, busy part of south west London. It’s full of restaurants, cafés, gyms, independent shops, and people looking for things to do. It also has a surprisingly rich local history — from Victorian grandeur to Blitz-era tragedy.

I figured the site could be structured around three main pillars:

- A directory of local businesses

- A list of upcoming events

- A local history section

Throw in a curated homepage and maybe a blog later, and I had the bones of a useful site. The kind of thing that people would find via Google or get sent a link to by a friend.

The Stack

I wanted something static, fast, and easy to deploy. My toolchain ended up being:

- Jekyll for the site generator

- Minimal Mistakes as the theme

- GitHub Pages for hosting

- Custom YAML data files for businesses and events

- ChatGPT for everything from content generation to Liquid loops

The site is 100% static, with no backend, no databases, no CMS. It builds automatically on GitHub push, and is entirely hosted via GitHub Pages.

Step by Step: Building It

I gave us about six solid hours to build something real. Here’s what we did (“we” meaning me + ChatGPT):

1. Domain Setup and Scaffolding

The domain was already pointed at GitHub Pages, and I had a basic “Hello World” site in place. We cleared that out, set up a fresh Jekyll repo, and added a _config.yml that pointed at the Minimal Mistakes remote theme. No cloning or submodules.

2. Basic Site Structure

We decided to create four main pages:

- Homepage (

index.md) - Directory (

directory/index.md) - Events (

events/index.md) - History (

history/index.md)

We used the layout: single layout provided by Minimal Mistakes, and created custom permalinks so URLs were clean and extension-free.

3. The Business Directory

This was built from scratch using a YAML data file (_data/businesses.yml). ChatGPT gathered an initial list of 20 local businesses (restaurants, shops, pubs, etc.), checked their status, and added details like name, category, address, website, and a short description.

In the template, we looped over the list, rendered sections with conditional logic (e.g., don’t output the website link if it’s empty), and added anchor IDs to each entry so we could link to them directly from the homepage.

4. The Events Page

Built exactly the same way, but using _data/events.yml. To keep things realistic, we seeded a small number of example events and included a note inviting people to email us with new submissions.

5. Featured Listings

We wanted the homepage to show a curated set of businesses and events. So we created a third data file, _data/featured.yml, which just listed the names of the featured entries. Then in the homepage template, we used where and slugify to match names and pull in the full record from businesses.yml or events.yml. Super DRY.

6. Map and Media

We added a map of Balham as a hero image, styled responsively. Later we created a .responsive-inline-image class to embed supporting images on the history page without overwhelming the layout.

7. History Section with Real Archival Images

This turned out to be one of the most satisfying parts. We wrote five paragraphs covering key moments in Balham’s development — Victorian expansion, Du Cane Court, The Priory, the Blitz, and modern growth.

Then we sourced five CC-licensed or public domain images (from Wikimedia Commons and Geograph) to match each paragraph. Each was wrapped in a <figure> with proper attribution and a consistent CSS class. The result feels polished and informative.

8. Metadata, SEO, and Polish

We went through all the basics:

- Custom

titleanddescriptionin front matter for each page - Open Graph tags and Twitter cards via site config

- A branded favicon using RealFaviconGenerator

- Added

robots.txt,sitemap.xml, and a hand-craftedhumans.txt - Clean URLs, no

.htmlextensions - Anchored IDs for deep linking

9. Analytics and Search Console

We added GA4 tracking using Minimal Mistakes’ built-in support, and verified the domain with Google Search Console. A sitemap was submitted, and indexing kicked in within minutes.

10. Accessibility and Performance

We ran Lighthouse and WAVE tests. Accessibility came out at 100%. Performance dipped slightly due to Google Fonts and image size, but we did our best to optimise without sacrificing aesthetics.

11. Footer CTA

We added a site-wide footer call-to-action inviting people to email us with suggestions for businesses or events. This makes the site feel alive and participatory, even without a backend form.

What Worked Well

- ChatGPT as co-pilot: I could ask it for help with Liquid templates, CSS, content rewrites, and even bug-hunting. It let me move fast without getting bogged down in docs.

- Minimal Mistakes: It really is an excellent theme. Clean, accessible, flexible.

- Data-driven content: Keeping everything in YAML meant templates stayed simple, and the whole site is easy to update.

- Staying focused: We didn’t try to do everything. Four pages, one day, good polish.

What’s Next?

- Add category filtering to the directory

- Improve the OG/social card image

- Add structured JSON-LD for individual events and businesses

- Explore monetisation: affiliate links, sponsored listings, local partnerships

- Start some blog posts or “best of Balham” roundups

Final Thoughts

This started as a fun experiment: could I monetise an unused domain and finally learn Jekyll properly?

What I ended up with is a genuinely useful local resource — one that looks good, loads quickly, and has room to grow.

If you’re sitting on an unused domain, and you’ve got a free day and a chatbot at your side — you might be surprised what you can build.

Oh, and one final thing – obviously you can also get ChatGPT to write a blog post talking about the project :-)

The post Building a website in a day — with help from ChatGPT appeared first on Davblog.

I built and launched a new website yesterday. It wasn’t what I planned to do, but the idea popped into my head while I was drinking my morning coffee on Clapham Common and it seemed to be the kind of thing I could complete in a day — so I decided to put my original plans on hold and built it instead.

The website is aimed at small business owners who think they need a website (or want to update their existing one) but who know next to nothing about web development and can easily fall prey to the many cowboy website companies that seem to dominate the “making websites for small companies” section of our industries. The site is structured around a number of questions you can ask a potential website builder to try and weed out the dodgier elements.

I’m not really in that sector of our industry. But while writing the content for that site, it occurred to me that some people might be interested in the tools I use to build sites like this.

Content

I generally build websites about topics that I’m interested in and, therefore, know a fair bit about. But I probably don’t know everything about these subjects. So I’ll certainly brainstorm some ideas with ChatGPT. And, once I’ve written something, I’ll usually run it through ChatGPT again to proofread it. I consider myself a pretty good writer, but it’s embarrassing how often ChatGPT catches obvious errors.

I’ve used DALL-E (via ChatGPT) for a lot of image generation. This weekend, I subscribed to Midjourney because I heard it was better at generating images that include text. So far, that seems to be accurate.

Technology

I don’t write much raw HTML these days. I’ll generally write in Markdown and use a static site generator to turn that into a real website. This weekend I took the easy route and used Jekyll with the Minimal Mistakes theme. Honestly, I don’t love Jekyll, but it integrates well with GitHub Pages and I can usually get it to do what I want — with a combination of help from ChatGPT and reading the source code. I’m (slowly) building my own Static Site Generator ( Aphra) in Perl. But, to be honest, I find that when I use it I can easily get distracted by adding new features rather than getting the site built.

As I’ve hinted at, if I’m building a static site (and, it’s surprising how often that’s the case), it will be hosted on GitHub Pages. It’s not really aimed at end-users, but I know to you use it pretty well now. This weekend, I used the default mechanism that regenerates the site (using Jekyll) on every commit. But if I’m using Aphra or a custom site generator, I know I can use GitHub Actions to build and deploy the site.

If I’m writing actual HTML, then I’m old-skool enough to still use Bootstrap for CSS. There’s probably something better out there now, but I haven’t tried to work out what it is (feel free to let me know in the comments).

For a long while, I used jQuery to add Javascript to my pages — until someone was kind enough to tell me that vanilla Javascript had mostly caught up and jQuery was no longer necessary. I understand Javascript. And with help from GitHub Copilot, I can usually get it doing what I want pretty quickly.

SEO

Many years ago, I spent a couple of years working in the SEO group at Zoopla. So, now, I can’t think about building a website without considering SEO.

I quickly lose interest in the content side of SEO. Figuring out what my keywords are and making sure they’re scattered through the content at the correct frequency, feels like it stifles my writing (maybe that’s an area where ChatGPT can help) but I enjoy Technical SEO. So I like to make sure that all of my pages contain the correct structured data (usually JSON-LD). I also like to ensure my sites all have useful OpenGraph headers. This isn’t really SEO, I guess, but these headers control what people see when they share content on social media. So by making that as attractive as possible (a useful title and description, an attractive image) it encourages more sharing, which increases your site’s visibility and, in around about way, improves SEO.

I like to register all of my sites with Ahrefs — they will crawl my sites periodically and send me a long list of SEO improvements I can make.

Monitoring

I add Google Analytics to all of my sites. That’s still the best way to find out how popular your site it and where your traffic is coming from. I used to be quite proficient with Universal Analytics, but I must admit I haven’t fully got the hang of Google Analytics 4 yet-so I’m probably only scratching the surface of what it can do.

I also register all of my sites with Google Search Console. That shows me information about how my site appears in the Google Search Index. I also link that to Google Analytics — so GA also knows what searches brought people to my sites.

Conclusion

I think that covers everything-though I’ve probably forgotten something. It might sound like a lot, but once you get into a rhythm, adding these extra touches doesn’t take long. And the additional insights you gain make it well worth the effort.

If you’ve built a website recently, I’d love to hear about your approach. What tools and techniques do you swear by? Are there any must-have features or best practices I’ve overlooked? Drop a comment below or get in touch-I’m always keen to learn new tricks and refine my process. And if you’re a small business owner looking for guidance on choosing a web developer, check out my new site-it might just save you from a costly mistake!

Originally published at https://blog.dave.org.uk on March 16, 2025.

I built and launched a new website yesterday. It wasn’t what I planned to do, but the idea popped into my head while I was drinking my morning coffee on Clapham Common and it seemed to be the kind of thing I could complete in a day – so I decided to put my original plans on hold and built it instead.

The website is aimed at small business owners who think they need a website (or want to update their existing one) but who know next to nothing about web development and can easily fall prey to the many cowboy website companies that seem to dominate the “making websites for small companies” section of our industries. The site is structured around a number of questions you can ask a potential website builder to try and weed out the dodgier elements.

I’m not really in that sector of our industry. But while writing the content for that site, it occurred to me that some people might be interested in the tools I use to build sites like this.

Content

I generally build websites about topics that I’m interested in and, therefore, know a fair bit about. But I probably don’t know everything about these subjects. So I’ll certainly brainstorm some ideas with ChatGPT. And, once I’ve written something, I’ll usually run it through ChatGPT again to proofread it. I consider myself a pretty good writer, but it’s embarrassing how often ChatGPT catches obvious errors.

I’ve used DALL-E (via ChatGPT) for a lot of image generation. This weekend, I subscribed to Midjourney because I heard it was better at generating images that include text. So far, that seems to be accurate.

Technology

I don’t write much raw HTML these days. I’ll generally write in Markdown and use a static site generator to turn that into a real website. This weekend I took the easy route and used Jekyll with the Minimal Mistakes theme. Honestly, I don’t love Jekyll, but it integrates well with GitHub Pages and I can usually get it to do what I want – with a combination of help from ChatGPT and reading the source code. I’m (slowly) building my own Static Site Generator (Aphra) in Perl. But, to be honest, I find that when I use it I can easily get distracted by adding new features rather than getting the site built.

As I’ve hinted at, if I’m building a static site (and, it’s surprising how often that’s the case), it will be hosted on GitHub Pages. It’s not really aimed at end-users, but I know how to use it pretty well now. This weekend, I used the default mechanism that regenerates the site (using Jekyll) on every commit. But if I’m using Aphra or a custom site generator, I know I can use GitHub Actions to build and deploy the site.

If I’m writing actual HTML, then I’m old-skool enough to still use Bootstrap for CSS. There’s probably something better out there now, but I haven’t tried to work out what it is (feel free to let me know in the comments).

For a long while, I used jQuery to add Javascript to my pages – until someone was kind enough to tell me that vanilla Javascript had mostly caught up and jQuery was no longer necessary. I understand Javascript. And with help from GitHub Copilot, I can usually get it doing what I want pretty quickly.

SEO

Many years ago, I spent a couple of years working in the SEO group at Zoopla. So, now, I can’t think about building a website without considering SEO.

I quickly lose interest in the content side of SEO. Figuring out what my keywords are and making sure they’re scattered through the content at the correct frequency, feels like it stifles my writing (maybe that’s an area where ChatGPT can help) but I enjoy Technical SEO. So I like to make sure that all of my pages contain the correct structured data (usually JSON-LD). I also like to ensure my sites all have useful OpenGraph headers. This isn’t really SEO, I guess, but these headers control what people see when they share content on social media. So by making that as attractive as possible (a useful title and description, an attractive image) it encourages more sharing, which increases your site’s visibility and, in around about way, improves SEO.

I like to register all of my sites with Ahrefs – they will crawl my sites periodically and send me a long list of SEO improvements I can make.

Monitoring

I add Google Analytics to all of my sites. That’s still the best way to find out how popular your site it and where your traffic is coming from. I used to be quite proficient with Universal Analytics, but I must admit I haven’t fully got the hang of Google Analytics 4 yet—so I’m probably only scratching the surface of what it can do.

I also register all of my sites with Google Search Console. That shows me information about how my site appears in the Google Search Index. I also link that to Google Analytics – so GA also knows what searches brought people to my sites.

Conclusion

I think that covers everything—though I’ve probably forgotten something. It might sound like a lot, but once you get into a rhythm, adding these extra touches doesn’t take long. And the additional insights you gain make it well worth the effort.

If you’ve built a website recently, I’d love to hear about your approach. What tools and techniques do you swear by? Are there any must-have features or best practices I’ve overlooked? Drop a comment below or get in touch—I’m always keen to learn new tricks and refine my process. And if you’re a small business owner looking for guidance on choosing a web developer, check out my new site—it might just save you from a costly mistake!

The post How I build websites in 2025 appeared first on Davblog.

I’ve been a member of Picturehouse Cinemas for something approaching twenty years. It costs about £60 a year and for that, you get five…

I’ve been a member of Picturehouse Cinemas for something approaching twenty years. It costs about £60 a year and for that, you get five free tickets and discounts on your tickets and snacks. I’ve often wondered whether it’s worth paying for, but in the last couple of years, they’ve added an extra feature that makes it well worth the cost. It’s called Film Club and every week they have two curated screenings that members can see for just £1. On Sunday lunchtime, there’s a screening of an older film, and on a weekday evening (usually Wednesday at the Clapham Picturehouse), they show something new. I’ve got into the habit of seeing most of these screenings.

For most of the year, I’ve been considering a monthly post about the films I’ve seen at Film Club, but I’ve never got around to it. So, instead, you get an end-of-year dump of the almost eighty films I’ve seen.

- Under the Skin [4 stars] 2024-01-14

Starting with an old(ish) favourite. The last time I saw this was a free preview for Picturehouse members, ten years ago. It’s very much a film that people love or hate. I love it. The book is great too (but very different) - Go West [3.5] 2024-01-21

They often show old films as mini-festivals of connected films. This was the first of a short series of Buster Keaton films. I hadn’t seen any of them. Go West was a film where I could appreciate the technical aspects, but I wasn’t particularly entertained - Godzilla Minus One [3] 2024-01-23